OK, so that was an interesting one. I’ve had a dedicated server at OVH for years and years, and I’ve always run VMware ESXi on it for virtualization because I didn’t really know what I was doing and the vSphere Client was a pretty nice GUI for managing things. Over the years, as I’ve gotten more comfortable with Linux, the limitations of ESXi have become more and more apparent. It’s really designed to be used as part of the wider VMware ecosystem, and if you aren’t using their entire platform, there’s really no reason to go with ESXi.

One of the biggest annoyances is the lack of visibility into the hardware. You need to use either SNMP or the VMware API to monitor anything, and honestly, I just prefer a decent syslog.

Having migrated my home network to a Proxmox cluster some weeks ago, I figured a standalone instance at a hosting provider would be a piece of cake. Thanks to the experience from last time, some parts went incredibly smoothly: converting the VMDKs to QCOW2s was as easy as could be (`qemu-img convert -f vmdk -O qcow2 disk.vmdk disk.qcow2`). The converted images were not only smaller, but also worked without any tweaking as soon as they were attached to their new KVM homes.
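If there are more than a couple of disks, the same one-liner can be looped over a directory. This is a minimal sketch, assuming all the VMDKs sit in one flat directory and each QCOW2 should land next to its source; `convert_vmdks` and the `DRY_RUN` switch are hypothetical. It skips ESXi's `-flat.vmdk` data extents, because `qemu-img` should be pointed at the small descriptor `.vmdk` that references them.

```shell
#!/usr/bin/env bash
# Batch version of the qemu-img one-liner: convert every VMDK in a directory
# to QCOW2 next to the original. Set DRY_RUN=1 to print the commands instead.
convert_vmdks() {
  local dir="$1" src dst
  for src in "$dir"/*.vmdk; do
    [ -e "$src" ] || continue                  # glob matched nothing
    case "$src" in
      *-flat.vmdk) continue ;;                 # ESXi data extent; convert its descriptor instead
    esac
    dst="${src%.vmdk}.qcow2"                   # disk.vmdk -> disk.qcow2
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo qemu-img convert -f vmdk -O qcow2 "$src" "$dst"
    else
      qemu-img convert -f vmdk -O qcow2 "$src" "$dst"
    fi
  done
}
```

Running `DRY_RUN=1 convert_vmdks /path/to/images` first shows exactly what would be converted before any disk space is used.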

However, there was one serious speed bump that I didn’t anticipate at all: the /etc/network/interfaces file was missing on the host. Somehow this machine was getting its OVH IP address, and I could not figure out where the configuration was coming from. (Spoiler: If you guessed systemd-networkd, you win!)

I had never used systemd-networkd, but I figured it couldn’t be that hard to get things up and running. Besides, since it’s a massive deviation from a stock Proxmox installation, it seemed like something OVH would have documented somewhere. Also note that Proxmox depends on /etc/network/interfaces for a lot of its networking-related GUI functionality, e.g., choosing a bridge during VM creation.

So the first thing I did was check out /etc/systemd/network/50-default.network. It looks more or less like a network interface config file, with all of the relevant OVH details included. OK, but it’s also assigning the address directly to eth0, and if I know anything about Proxmox, it’s that it loves its bridges: it wants a bridge to connect those VMs to the outside world.

That gave me a place to start.
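On a live system, `networkctl status eth0` should report which .network file was applied to the interface. To see at a glance which file in a directory claims which interface, a small sketch like this also works; it assumes simple `Name=` lines with no spaces around the `=`, and `list_network_matches` is a hypothetical helper.

```shell
# List which interface name(s) each .network file in a directory matches.
# Only Name= lines inside the [Match] section are reported.
list_network_matches() {
  local dir="${1:-/etc/systemd/network}" f
  for f in "$dir"/*.network; do
    [ -e "$f" ] || continue
    awk -v file="${f##*/}" '
      /^\[/ { in_match = ($0 == "[Match]") }        # track the current section
      in_match && /^Name=/ { sub(/^Name=/, ""); print file ": " $0 }
    ' "$f"
  done
}
```

Called with no argument, it reads /etc/systemd/network. A file masked by a symlink to /dev/null simply produces no output.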

Setting up a bridge for VMs

Fortunately, plenty of people out there have also set up bridges in their various flavors of Linux with systemd-networkd, so there was enough documentation to put together the pieces.

- A netdev file creating the bridge device (vmbr0 in this case)
- A network file assigning eth0 to the bridge (think `bridge_ports` in /etc/network/interfaces terms)
- A network file configuring vmbr0
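A sketch that writes those three files into a scratch directory for review before anything goes into /etc/systemd/network. The file names, their numeric prefixes, and `write_bridge_files` are all assumptions. One detail matters when picking names: systemd-networkd applies only the first .network file, in lexical order, that matches a given interface. An eth0 bridge-port file that sorts after 50-default.network is therefore ignored while the OVH file is still in place; with the `25-` prefix used here, it would sort first.

```shell
# Write the three systemd-networkd files for a bridge into a target directory.
write_bridge_files() {
  local dir="$1" bridge="$2" port="$3"
  mkdir -p "$dir"

  # 1. netdev: create the bridge device itself
  printf '[NetDev]\nName=%s\nKind=bridge\n' "$bridge" > "$dir/25-$bridge.netdev"

  # 2. network: attach the physical port to the bridge (like bridge_ports)
  printf '[Match]\nName=%s\n\n[Network]\nBridge=%s\n' "$port" "$bridge" > "$dir/25-$port.network"

  # 3. network: configure the bridge; the addressing is left as a placeholder
  printf '[Match]\nName=%s\n\n[Network]\n# Address=, Gateway=, DNS= go here\n' "$bridge" > "$dir/30-$bridge.network"
}
```

For example, `write_bridge_files /tmp/bridge-test vmbr0 eth0` produces the skeleton for the setup described here.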

Creating a netdev file for the bridge

```
[NetDev]
Name=vmbr0
Kind=bridge
```

Short and sweet.

Creating a network with the bridge

```
[Match]
Name=eth0

[Network]
Bridge=vmbr0
```

Also short and sweet.

Configuring the bridge interface

Everything from the [Network] section on was copied and pasted from OVH’s original /etc/systemd/network/50-default.network.

```
[Match]
Name=vmbr0

[Network]
DHCP=no
Address=xx.xxx.xx.xx/24
Gateway=xx.xxx.xxx.xxx
#IPv6AcceptRA=false
NTP=ntp.ovh.net
DNS=127.0.0.1
DNS=213.186.33.99
DNS=2001:41d0:3:163::1
Gateway=[IPv6 stuff]

[Address]
Address=[some IPv6 stuff]

[Route]
Destination=[more IPv6 stuff]
Scope=link
```

Seems simple enough… But wait, what do I do about the original configuration for eth0? Do I leave it in place? It could conflict with the bridge. Do I need to set it to the systemd equivalent of “manual” somehow? These are questions I could not find a simple answer to, so I just rebooted.

When the system came back up (with 50-default.network still in place), the bridge had been created, but it was DOWN according to ip addr, while eth0 was UP and had the public IP address. Guess that answers that: 50-default.network needs to go.

```
# ln -sf /dev/null /etc/systemd/network/50-default.network
```
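`ln -sf` replaces the file outright, so OVH’s original settings are gone after this step unless they were saved first (the bridge config above was copied from it). A slightly safer sketch keeps a copy under a suffix networkd ignores, since it only reads .network files with exactly that extension. `mask_network_file` and `is_masked` are hypothetical helpers.

```shell
# Back up a .network file, then mask it with a symlink to /dev/null.
mask_network_file() {
  local file="$1"
  # Keep the original contents; networkd ignores the .orig suffix.
  if [ -f "$file" ] && [ ! -L "$file" ]; then
    cp -- "$file" "$file.orig"
  fi
  ln -sf /dev/null "$file"
}

# Succeeds if the given path is a symlink pointing at /dev/null.
is_masked() {
  [ "$(readlink -- "$1")" = /dev/null ]
}
```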

Another quick reboot and we should be back in business. But no, the server didn’t come back up. Rest in peace. I barely knew ye…as a Proxmox server. Any guesses as to what’s missing? If you said, “You needed to clone the MAC of eth0 onto vmbr0.” you are absolutely correct. Had to modify the netdev file accordingly:

```
[NetDev]
Name=vmbr0
Kind=bridge
MACAddress=00:11:22:33:44:55
```
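Presumably the upstream network only delivers the server’s traffic to the physical NIC’s MAC address, while systemd typically gives a new bridge a generated MAC of its own. Instead of copying the address by hand, it can be read from sysfs. This is a sketch assuming GNU sed and a .netdev file that already has a `Kind=` line. `clone_mac_into_netdev` is hypothetical, and the `SYSFS_NET` override exists only so it can be tried against a scratch directory.

```shell
# Copy a NIC's MAC address into the [NetDev] section of a bridge .netdev file.
clone_mac_into_netdev() {
  local iface="$1" netdev="$2" mac
  mac=$(cat "${SYSFS_NET:-/sys/class/net}/$iface/address")
  # Drop any existing MACAddress= line, then add the new one right after Kind=.
  sed -i -e '/^MACAddress=/d' -e "/^Kind=/a MACAddress=$mac" "$netdev"
}
```

Running it twice leaves a single `MACAddress=` line, so it is safe to re-run.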

OK, one more reboot…and this time we’re finally working. System came up and was still accessible, vmbr0 had the public IP, everything was looking good.
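The "vmbr0 has the public IP" check can be scripted for future reboots. This sketch parses brief `ip -br addr` output, whose columns are the interface name, its state, and then its addresses. The helper name and the documentation-range address below are placeholders.

```shell
# Succeed if an interface is UP and carries the given address.
# Reads `ip -br addr` style output on stdin.
iface_has_addr() {
  local iface="$1" addr="$2"
  awk -v ifc="$iface" -v a="$addr" '
    $1 == ifc && $2 == "UP" { for (i = 3; i <= NF; i++) if ($i == a) found = 1 }
    END { exit !found }
  '
}
```

Usage: `ip -br addr | iface_has_addr vmbr0 203.0.113.10/24 && echo "vmbr0 looks good"`.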

Creating a VM

Since the disk image doesn’t know anything about the specs of the VM, I had to recreate the VMs by hand. As I’m not a glutton for punishment, I used the Proxmox web interface to do this. But wait, remember how Proxmox uses /etc/network/interfaces and not systemd-networkd? When it came time to add a network adapter to the VM, there were no bridges available and the bridge is a required field. I ended up having to select No Network Device to get the web interface to create the VM. Then I logged into the server via SSH and edited the VM config file manually (while I was in there, I also pointed it at the converted QCOW2 instead of the disk created with the VM):

```
...
scsi0: local:100/old_disk.qcow2
net0: virtio=00:11:22:33:44:56,bridge=vmbr0
```
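Proxmox keeps each VM’s configuration in /etc/pve/qemu-server/<vmid>.conf as `key: value` lines. A hypothetical helper for making the same edit non-interactively could look like the following. It assumes the VM has no snapshots, since snapshot sections repeat keys further down the file, and it writes the result back over the original file.

```shell
# Set (or replace) a "key: value" line in a Proxmox VM config file.
set_vm_option() {
  local conf="$1" key="$2" value="$3" tmp
  tmp=$(mktemp)
  # Drop any existing "key: ..." line, then append the new value.
  grep -v "^$key: " "$conf" > "$tmp" || true
  printf '%s: %s\n' "$key" "$value" >> "$tmp"
  cat "$tmp" > "$conf"
  rm -f "$tmp"
}
```

For example: `set_vm_option /etc/pve/qemu-server/100.conf net0 'virtio=00:11:22:33:44:56,bridge=vmbr0'`.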

If you haven’t used OVH before, you might think, “OK, we’re done. Neat.” But wait, there’s more. OVH doesn’t let your VMs simply start using the IPs allocated to you; you first need to register a MAC address for each IP address. In most cases, this is super-simple, and you don’t need to do anything more than paste the MAC address and be on your way. Unfortunately, in my case, these VMs are a relic of the old ESXi setup, where a dedicated router VM has all of the IPs allocated to it and uses iptables to forward traffic to the other VMs accordingly.

```
-----------------------------------------------
| VMware PUBLIC.IP.ADDRESS                    |
|                                             |
| [ Router VM ]               [ VM 1 ]        |
| WAN: PUBLIC.IP.ADDRESSES    LAN: 10.2.0.11  |
| LAN: 10.2.0.1                               |
|                             [ VM 2 ]        |
|                             LAN: 10.2.0.12  |
-----------------------------------------------
```

“What’s wrong with that?”, you ask, “Seems as though it should work just fine. Add the LAN IP to the bridge, put the VMs on the bridge, give them LAN IPs and everything’s good.” Yeah, sure, if I don’t mind my WAN traffic and LAN traffic going through the same bridge interface. But I do mind. I mind very much.
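For context, the router VM’s role in that diagram is 1:1 NAT between a public IP and a LAN address. Its actual rules aren’t shown here, so this is only a generic sketch of 1:1 NAT with iptables, not that configuration. `nat_rules` is hypothetical and only prints commands. The router also needs IPv4 forwarding enabled (`net.ipv4.ip_forward=1`).

```shell
# Print iptables commands for a 1:1 NAT mapping on the router VM (nothing is applied).
nat_rules() {
  local public_ip="$1" lan_ip="$2"
  # Inbound: traffic addressed to the public IP is rewritten to the LAN host.
  echo "iptables -t nat -A PREROUTING -d $public_ip -j DNAT --to-destination $lan_ip"
  # Outbound: the LAN host's traffic leaves with the same public IP as its source.
  echo "iptables -t nat -A POSTROUTING -s $lan_ip -j SNAT --to-source $public_ip"
}
```

For example, `nat_rules 203.0.113.11 10.2.0.11` prints the pair of rules for VM 1, using an address from the 203.0.113.0/24 documentation range as the public IP.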

Solution: Creating a second bridge for the internal network

After already having created a bridge for the public address, this part was simple. Add another netdev for the new bridge and a minimal configuration for its network.

```
[NetDev]
Name=vmbr1
Kind=bridge
```

```
[Match]
Name=vmbr1

[Network]
Address=0.0.0.0
```

Rather than reboot, I tried `systemctl restart systemd-networkd` to see if it would pick up the bridge interface correctly, and it did. One minor issue: systemd gave the bridge 10.0.0.1/255.0.0.0. Not that it matters in practice, but it was unexpected. (In hindsight, that matches the documented systemd-networkd behavior for Address=0.0.0.0, which asks it to allocate an unused range from its address pool.) I rebooted anyway at this point to be sure everything came up working, and it did.

Then it was just a matter of manually adding another network interface to the router VM and assigning it to vmbr1. It’s worth noting that rebooting the VM doesn’t make it adopt configuration changes; it has to be stopped and started.
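From the host shell, the stop-and-start can be done with Proxmox’s `qm` tool. Here is a minimal sketch. `cold_restart_vm` and `DRY_RUN` are hypothetical. `qm shutdown` relies on the guest responding to an ACPI power-off request, whereas `qm stop` ends the VM immediately.

```shell
# Fully stop and start a VM so the new QEMU process picks up hardware changes.
# Set DRY_RUN=1 to print the commands instead of running them.
cold_restart_vm() {
  local vmid="$1" run=""
  if [ "${DRY_RUN:-0}" = 1 ]; then run="echo"; fi
  $run qm shutdown "$vmid"   # clean ACPI power-off request to the guest
  $run qm start "$vmid"
}
```

For example, `cold_restart_vm 100` for the router VM, after adding its vmbr1 interface.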

Epilogue

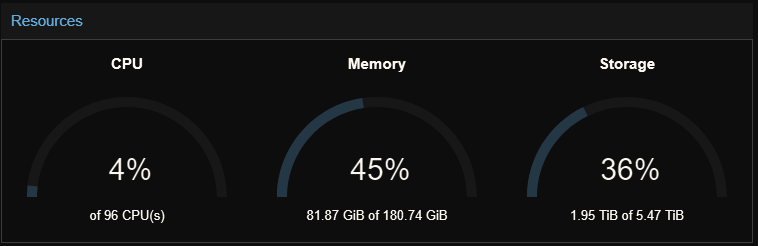

After that, I created the remainder of the VMs, added their old disk images, gave them network devices, and everything was up and running just like before. Well, almost just like before: now I had better insight into my hardware.

One of the first things I did after updating the system was install the RAID controller software and check the RAID status. One drive had failed. Who knows how long that had been going on? It was 1200 ATA errors deep at that point. Even though I’ve got great backups of the VMs (and their most recent disk images), a total storage failure would’ve been a much more stressful event. As it was, I reported the failed disk to OVH and they replaced it. Annoyingly, though, they either pulled the wrong disk or shut the server down to do the swap, despite my telling them which controller slot needed replacing. The RAID array is currently rebuilding and I’m running a shiny, new Proxmox instance. Feels good.